Running AI Models with Open WebUI

Open WebUI is a versatile web-based platform designed to integrate smoothly with a range of language processing interfaces, like Ollama and other tools compatible with OpenAI-style APIs. It offers a suite of features that streamline managing and interacting with language models, adaptable for both server and personal use, transforming your setup into an advanced workstation for language tasks.

This platform lets you manage and communicate with language models through an easy-to-use graphical interface, accessible on both desktops and mobile devices. It even incorporates a voice interaction feature, making it as natural as having a conversation.

Prerequisites for GPU and CPU VMs

For GPU VM

- GPUs: 1x RTX A6000 (for smooth execution).

- Disk Space: 40 GB free.

- RAM: 40 GB.

- CPU: 24 Cores

For CPU VM

- Disk Space: 100 GB free.

- RAM: 16 GB.

- CPU: 4 Cores
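Once a VM exists, it can help to confirm that it actually meets these numbers. The following is a minimal sketch for a Linux VM, not an official NodeShift or Open WebUI tool. The variable names and the `meets_minimum` and `report` helpers are made up for illustration, and the defaults mirror the CPU VM list above.

```shell
# Minimums taken from the CPU VM list; override them for the GPU VM profile,
# e.g. MIN_CORES=24 MIN_RAM_GB=40 MIN_DISK_GB=40.
MIN_CORES="${MIN_CORES:-4}"
MIN_RAM_GB="${MIN_RAM_GB:-16}"
MIN_DISK_GB="${MIN_DISK_GB:-100}"

# usage: meets_minimum ACTUAL REQUIRED (both whole numbers)
meets_minimum() {
  [ "$1" -ge "$2" ]
}

# usage: report LABEL ACTUAL REQUIRED
report() {
  if meets_minimum "$2" "$3"; then
    echo "OK   $1: $2 (need $3)"
  else
    echo "LOW  $1: $2 (need $3)"
  fi
}

# Linux-specific probes: nproc, /proc/meminfo, and free space on the root filesystem.
cores="$(nproc)"
ram_gb="$(awk '/MemTotal/ {printf "%.0f", $2 / 1024 / 1024}' /proc/meminfo)"
disk_gb="$(df -Pk / | awk 'NR==2 {printf "%.0f", $4 / 1024 / 1024}')"

report "CPU cores" "$cores" "$MIN_CORES"
report "RAM (GB)" "$ram_gb" "$MIN_RAM_GB"
report "Free disk (GB)" "$disk_gb" "$MIN_DISK_GB"
```

RAM and disk are rounded to the nearest whole gigabyte, because a VM sold as 16 GB usually reports slightly less than that to the operating system.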

Step-by-Step Process for Running AI Models with Open WebUI in the Cloud

For the purpose of this tutorial, we will use a GPU-powered Virtual Machine offered by NodeShift, as it provides the optimal configuration to achieve the fastest performance while running Open WebUI. NodeShift offers affordable Virtual Machines that meet stringent compliance standards, including GDPR, SOC2, and ISO27001, ensuring data security and privacy.

However, if you prefer to use a CPU-powered Virtual Machine, you can still follow this guide. Open WebUI works on CPU-based VMs as well, though performance may be slower compared to a GPU setup. The installation process remains largely the same, allowing you to achieve similar functionality on a CPU-powered machine. NodeShift’s infrastructure is versatile, enabling you to choose between GPU or CPU configurations based on your specific needs and budget.

Let’s dive into the setup and installation steps to get Open WebUI running efficiently on your chosen virtual machine.

Step 1: Sign Up and Set Up a NodeShift Cloud Account

Visit the NodeShift Platform and create an account. Once you've signed up, log in to your account.

Follow the account setup process and provide the necessary details and information.

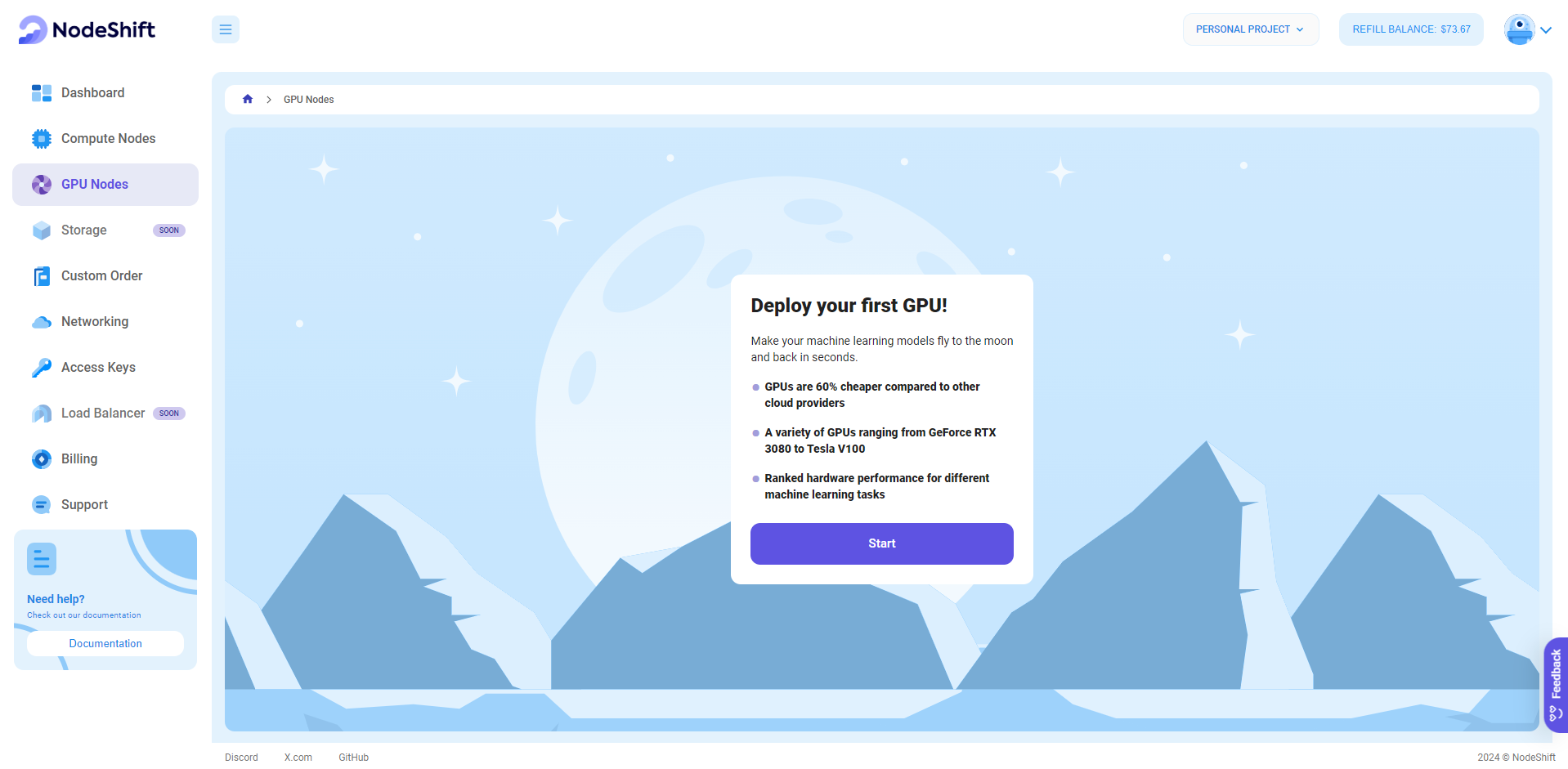

Step 2: Create a GPU Node (Virtual Machine)

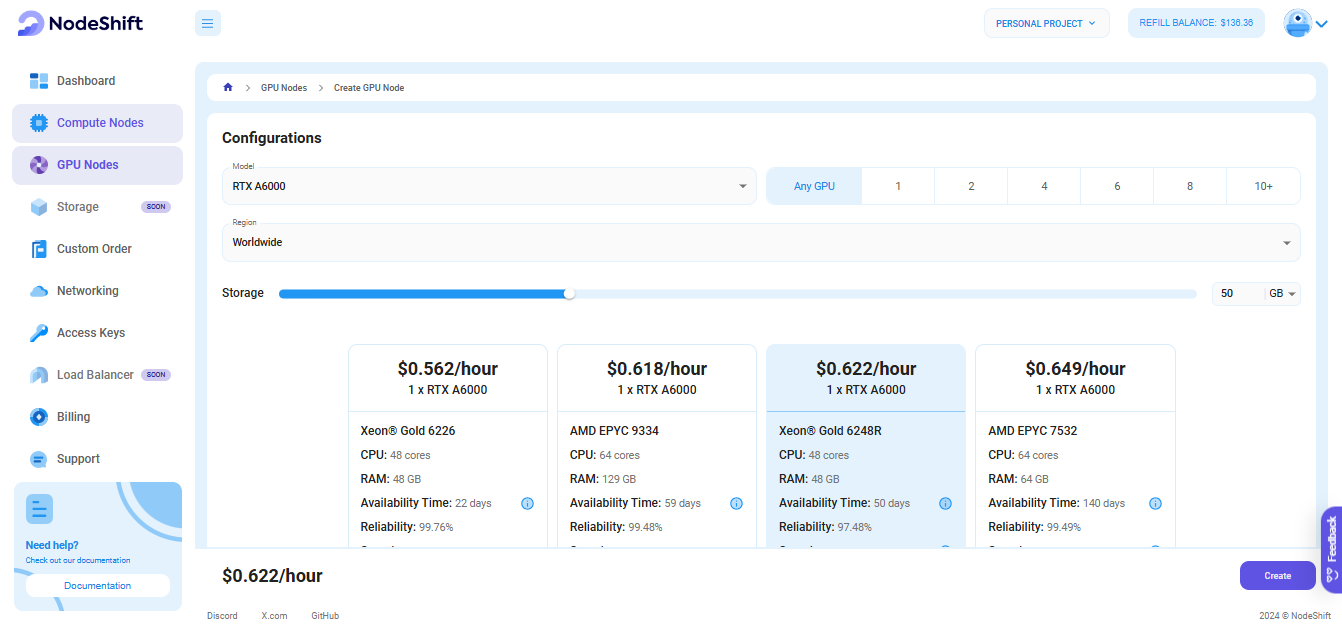

GPU Nodes are NodeShift's GPU Virtual Machines, on-demand resources equipped with diverse GPUs ranging from H100s to A100s. These GPU-powered VMs provide enhanced environmental control, allowing configuration adjustments for GPUs, CPUs, RAM, and Storage based on specific requirements.

In the menu on the left side, select the GPU Nodes option. Then, in the Dashboard, click the Create GPU Node button to create your first Virtual Machine deployment.

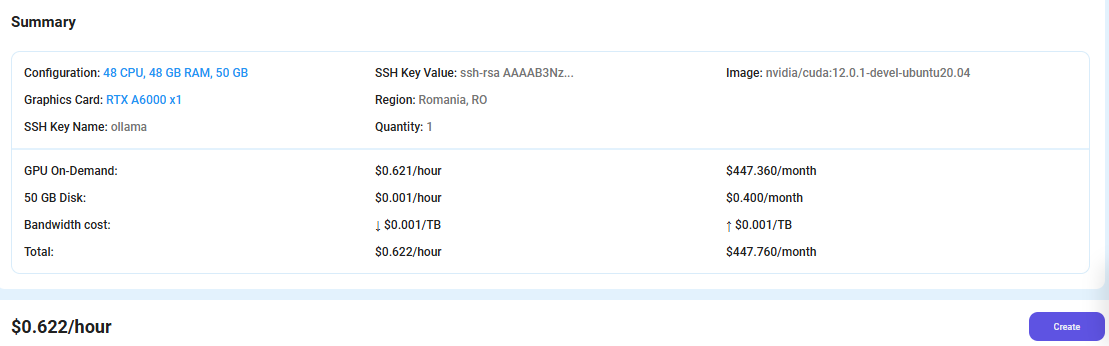

Step 3: Select a Model, Region, and Storage

In the "GPU Nodes" tab, select a GPU model, a storage size, and the geographical region where you want to launch your Virtual Machine, according to your needs.

We will use 1x RTX A6000 GPU for this tutorial to achieve the fastest performance. However, you can choose a more affordable GPU with less VRAM if that better suits your requirements.

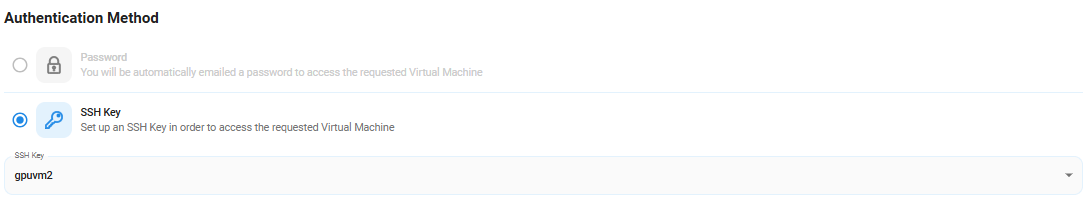

Step 4: Select Authentication Method

There are two authentication methods available: Password and SSH Key. SSH keys are a more secure option. To create them, please refer to our official documentation.

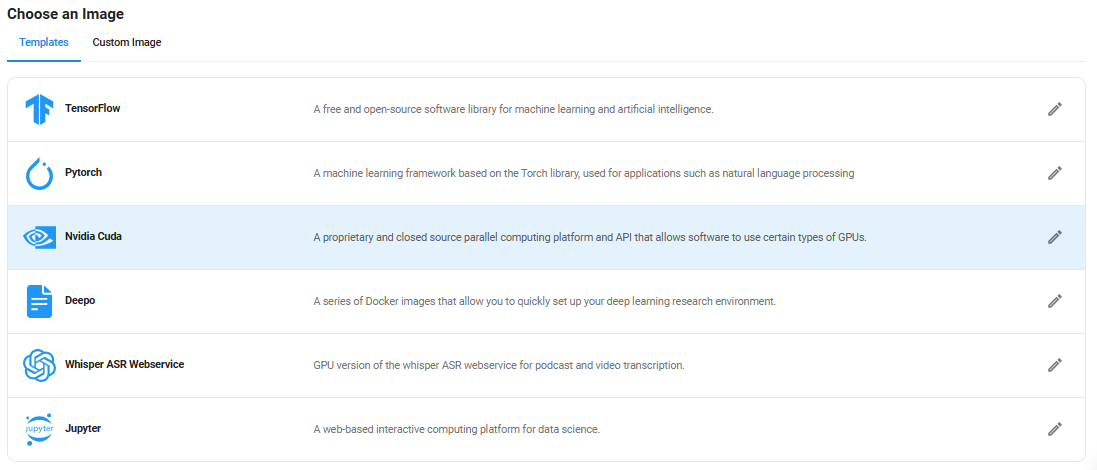

Step 5: Choose an Image

Next, you will need to choose an image for your Virtual Machine. We will deploy Open WebUI on an NVIDIA CUDA Virtual Machine. CUDA, NVIDIA's proprietary, closed-source parallel computing platform, will allow you to install and run Open WebUI on your GPU Node.

After choosing the image, click the 'Create' button, and your Virtual Machine will be deployed.

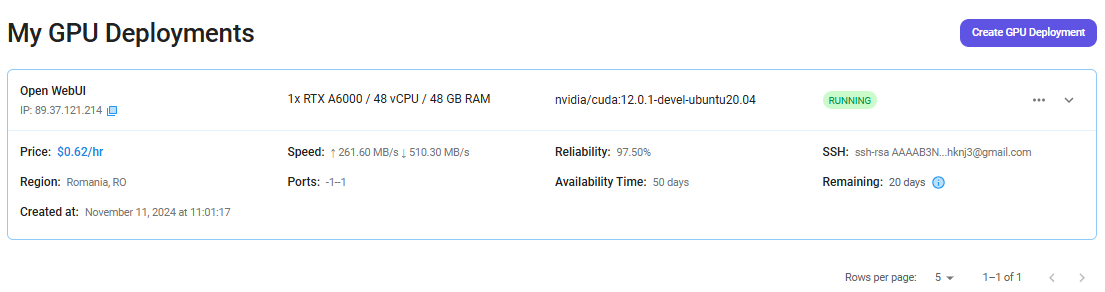

Step 6: Virtual Machine Successfully Deployed

You will get visual confirmation that your node is up and running.

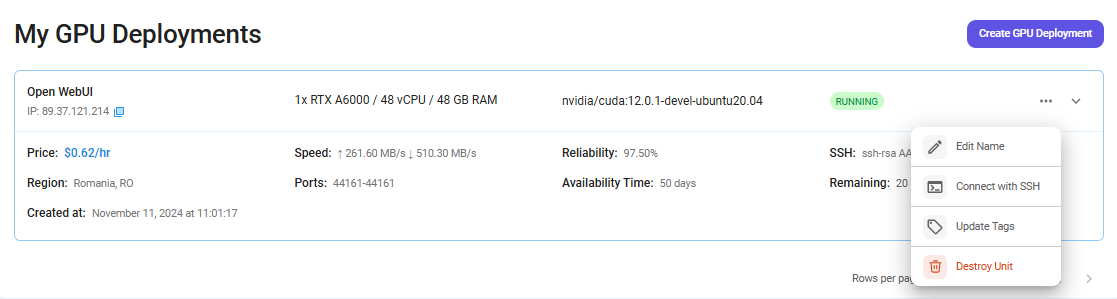

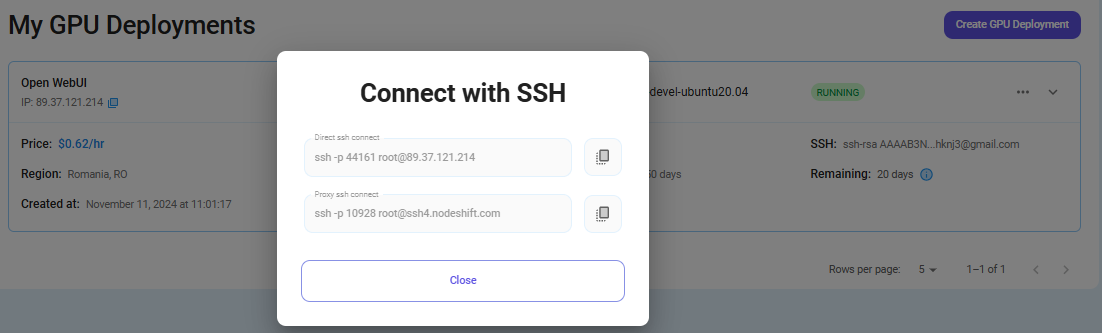

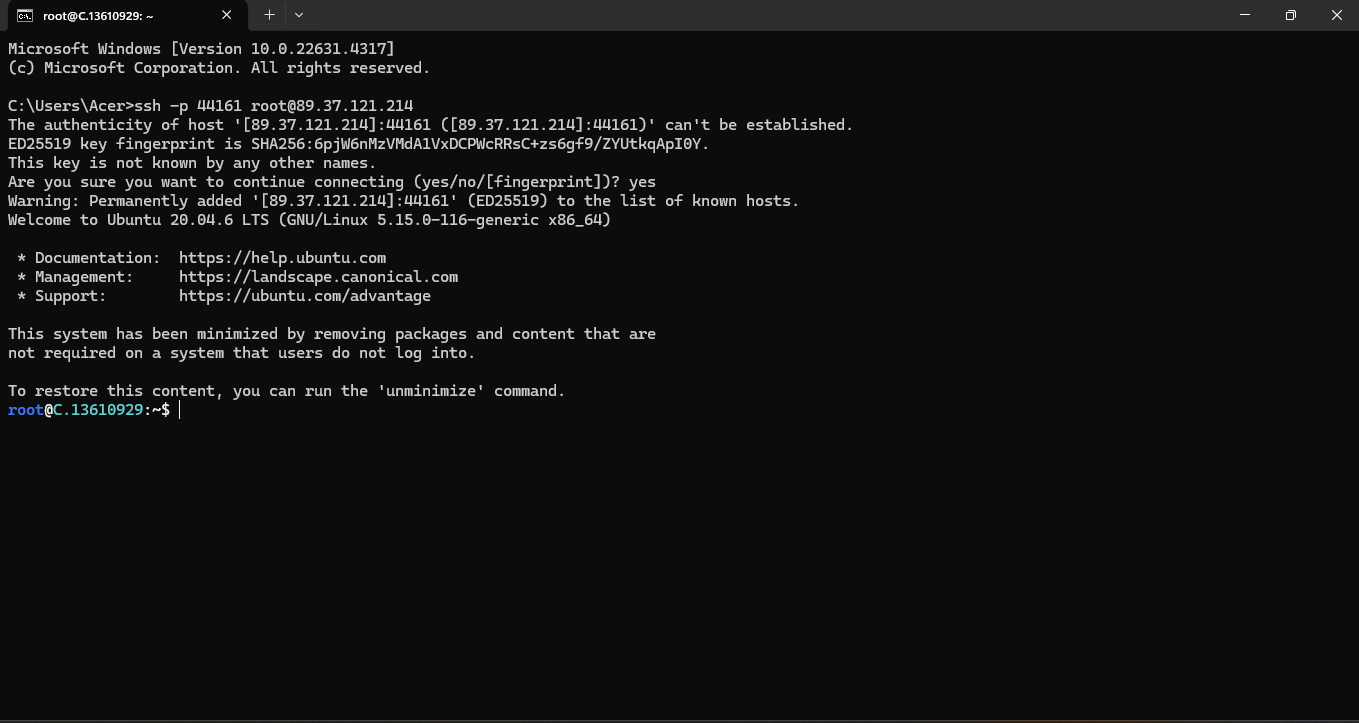

Step 7: Connect to GPUs using SSH

You can connect to NodeShift GPU Nodes and control them from a terminal using the SSH key you provided when creating the node.

Once your GPU Node deployment is successfully created and has reached the 'RUNNING' status, you can navigate to the page of your GPU Deployment Instance. Then, click the 'Connect' button in the top right corner.

Now open your terminal and paste the proxy SSH or direct SSH connection details shown for your node.

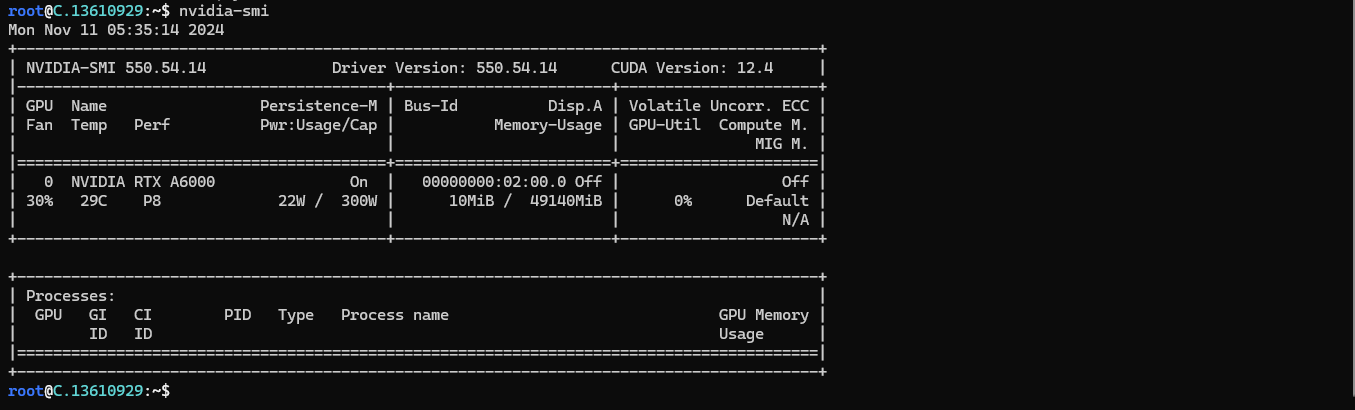

Next, if you want to check the GPU details, run the command below:

nvidia-smi

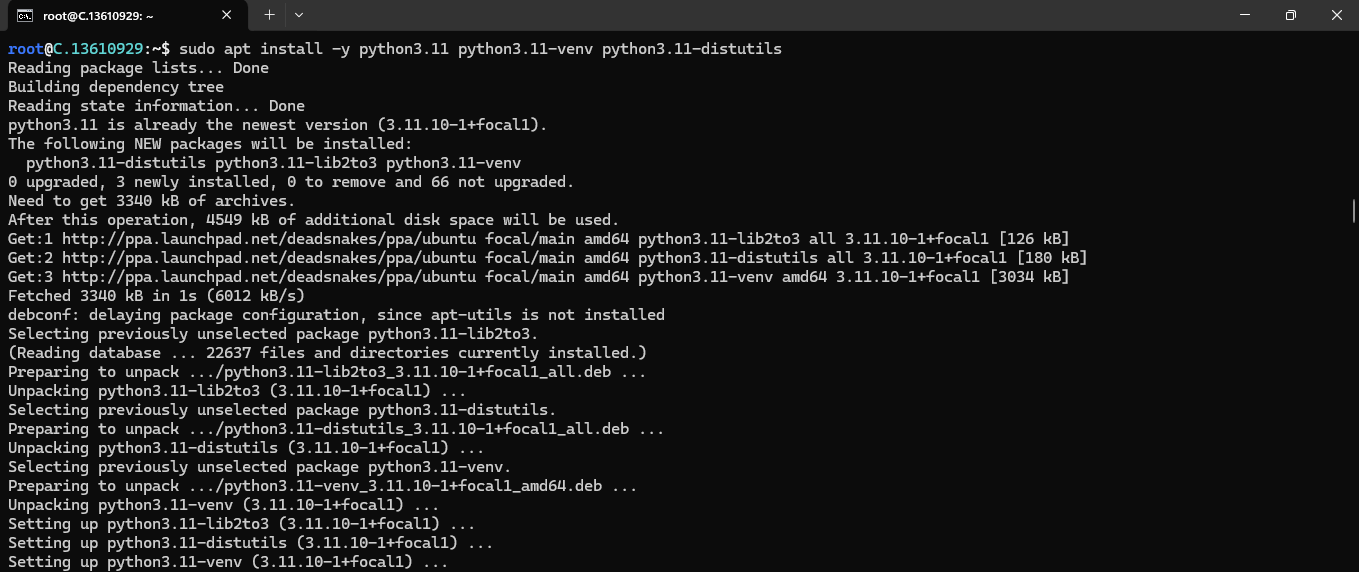
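If only a few fields are of interest, `nvidia-smi` can print them in CSV form. The sketch below wraps that query in a small helper; the function name `gpu_summary` is hypothetical, and the fallback message covers CPU-only VMs, where the tool is normally absent.

```shell
# Print the GPU name, total memory, and driver version, one GPU per line.
gpu_summary() {
  if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name,memory.total,driver_version --format=csv,noheader
  else
    echo "nvidia-smi not found (CPU-only VM, or the NVIDIA driver is not installed)"
  fi
}

gpu_summary || echo "nvidia-smi failed; check the driver installation"
```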

Step 8: Install Pip and Python

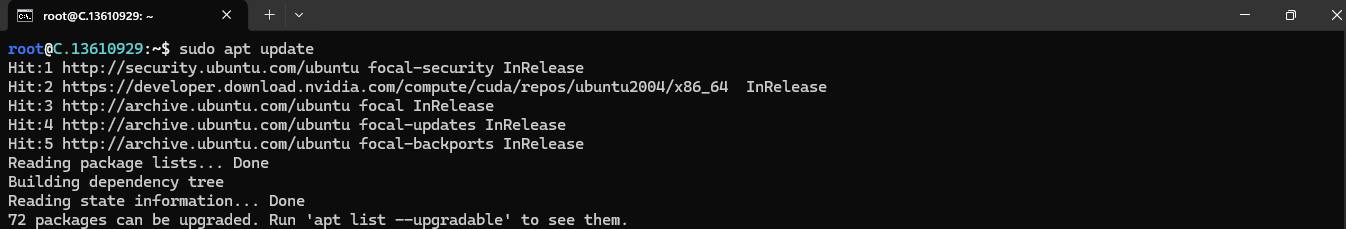

Run the following command to update the package list:

sudo apt update

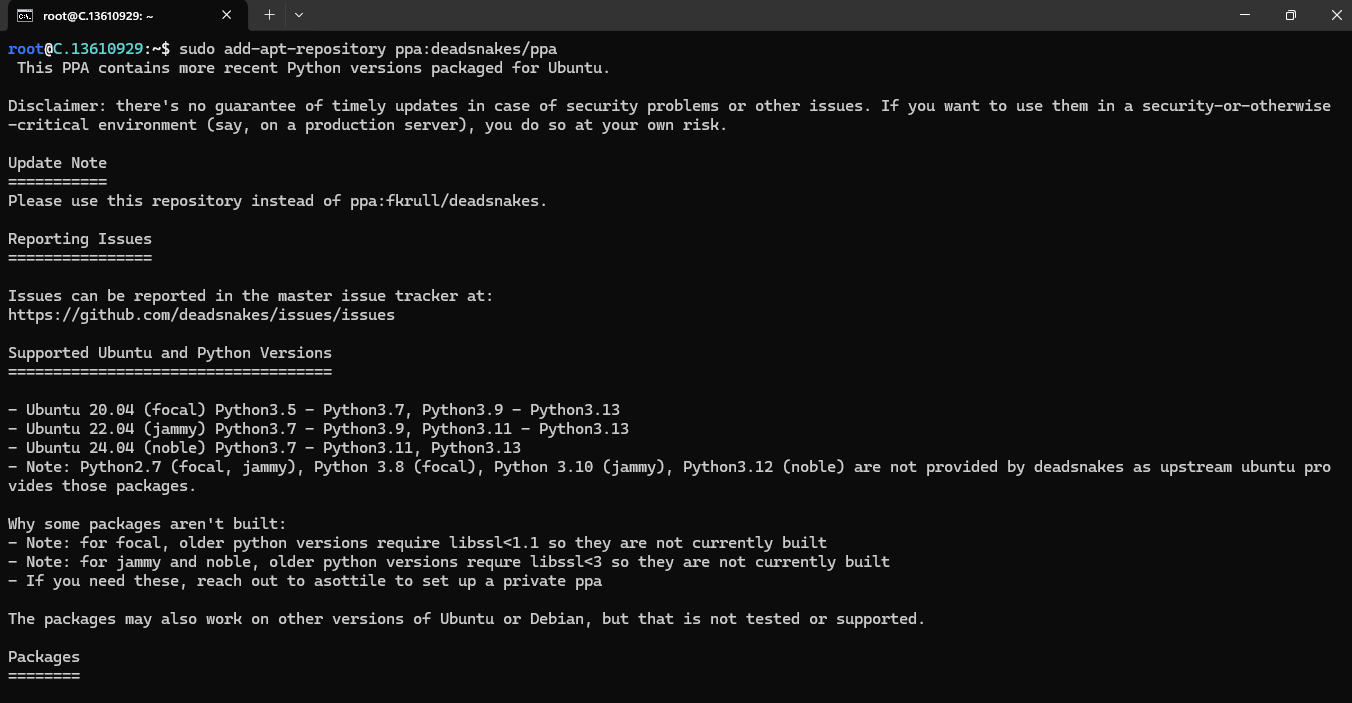

Run the following command to add the deadsnakes PPA (which maintains newer versions of Python):

sudo add-apt-repository ppa:deadsnakes/ppa

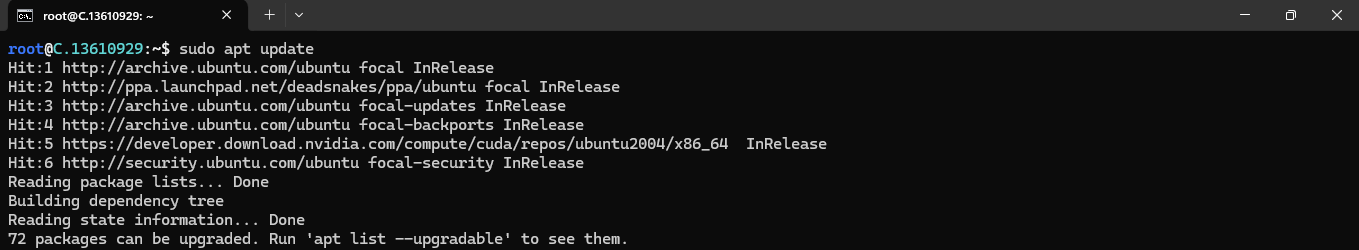

Run the following command to update the package list again:

sudo apt update

Then run the following command to install Python 3.11 (or the latest stable version):

sudo apt install python3.11 python3-pip -y

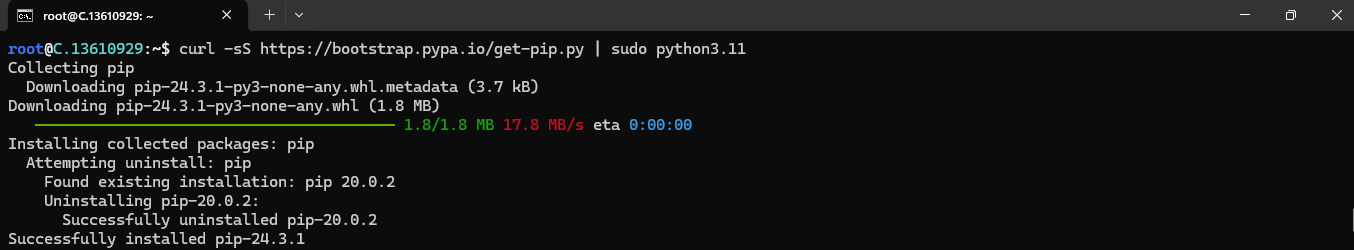

Run the following command to download and install pip specifically for Python 3.11:

curl -sS https://bootstrap.pypa.io/get-pip.py | sudo python3.11

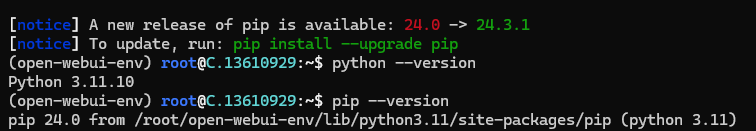

Step 9: Create a Virtual Environment

Creating a virtual environment is recommended, because it isolates your Open WebUI installation from system-wide packages.

Run the following commands to create and activate it:

python3.11 -m venv open-webui-env

source open-webui-env/bin/activate

Then run the following commands to check the Python and pip versions:

python --version

pip --version

Ensure that Python 3.11 and pip 24.0 are installed, as they are compatible with Open WebUI.

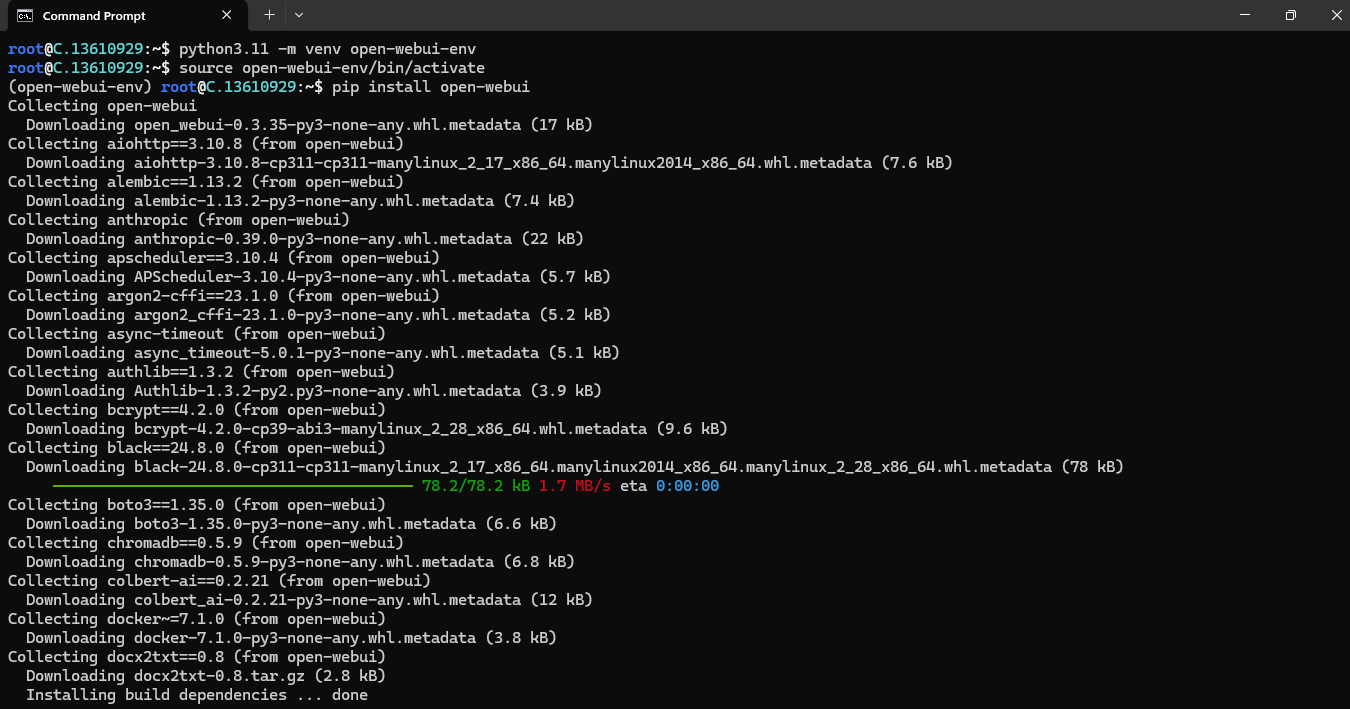
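A quick way to tell whether the environment is really active in the current shell is to look at the `VIRTUAL_ENV` variable, which the `activate` script sets. The snippet below is a small sketch of that check; the helper name `in_venv` and the `ENV_DIR` variable are made up for illustration.

```shell
ENV_DIR="${ENV_DIR:-open-webui-env}"   # directory name used in this step

# True when a virtual environment has been activated in this shell.
in_venv() {
  [ -n "${VIRTUAL_ENV:-}" ]
}

if in_venv; then
  echo "Active virtual environment: $VIRTUAL_ENV"
  echo "python resolves to: $(command -v python)"
else
  echo "No virtual environment is active. Run: source $ENV_DIR/bin/activate"
fi
```

If `python3.11 -m venv` itself fails with a message about ensurepip, Ubuntu-based images typically need the `python3.11-venv` package (`sudo apt install python3.11-venv`). Whether your image already ships it is something to check rather than assume.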

Step 10: Install Open WebUI

Run the following command to install Open WebUI:

pip install open-webui

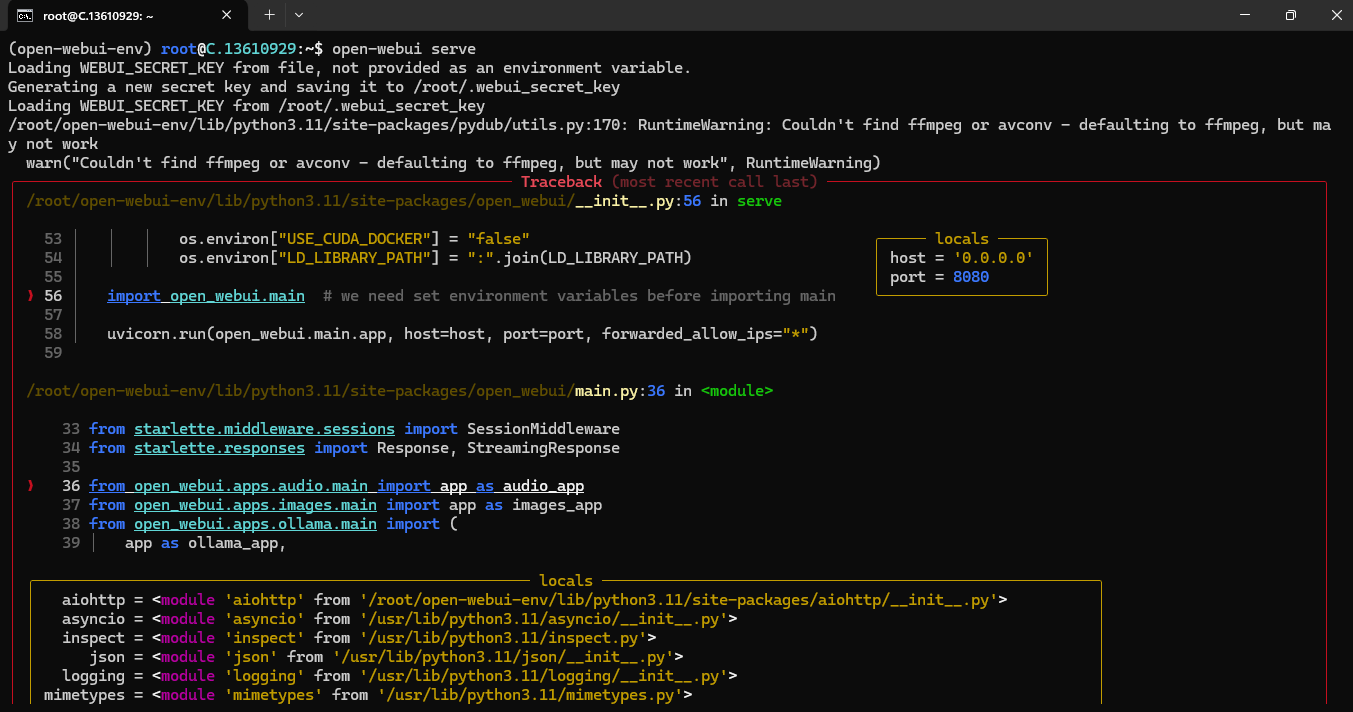

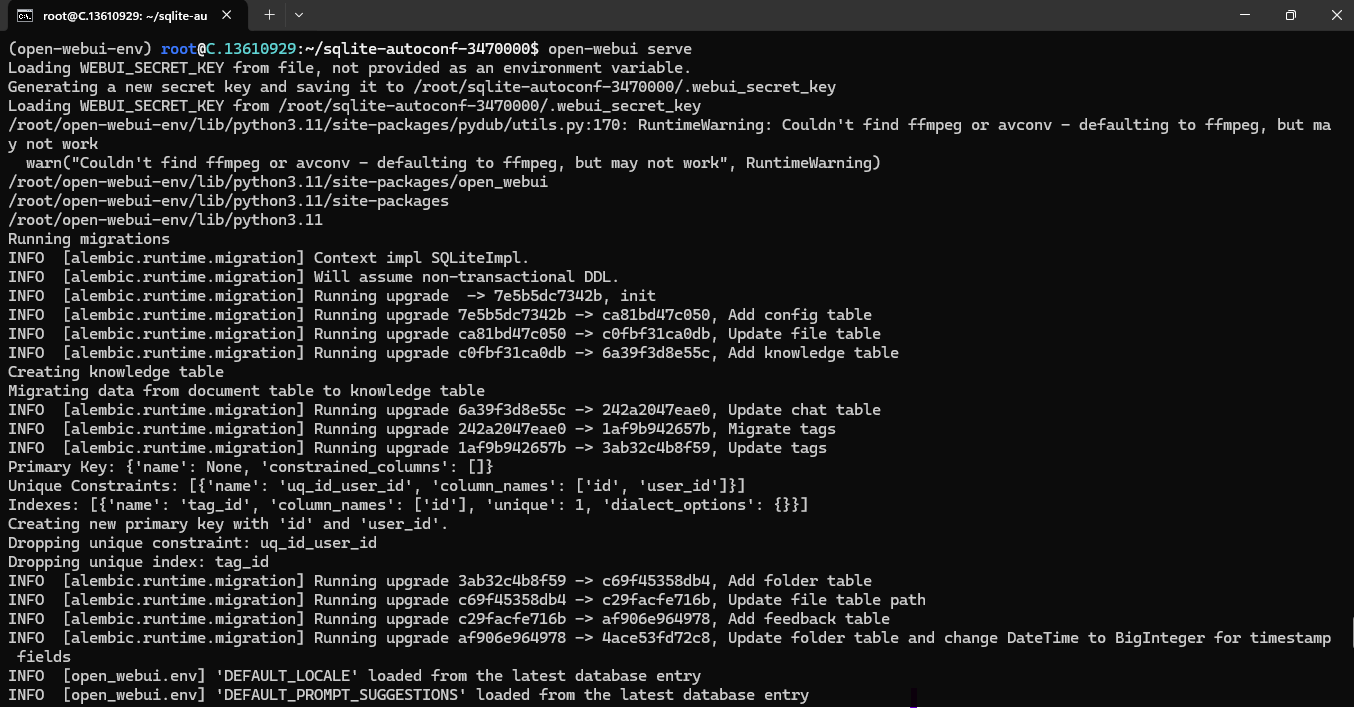

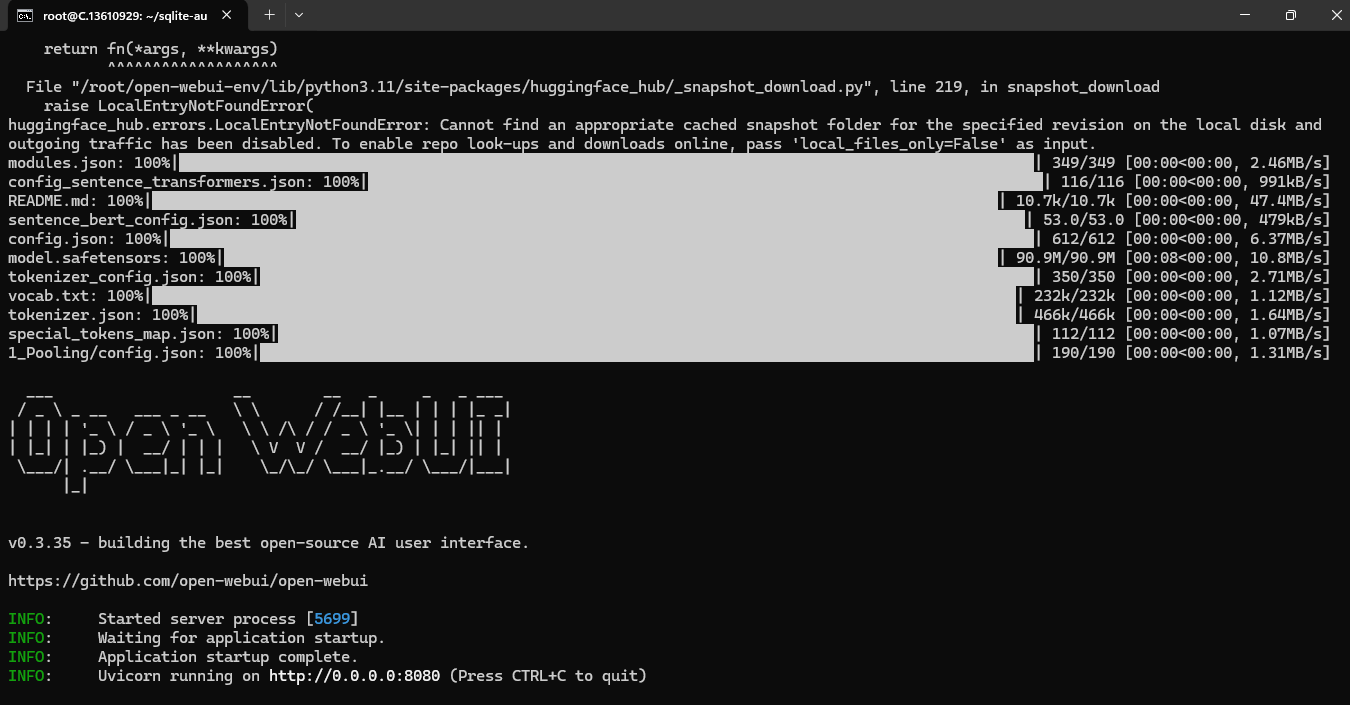
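To confirm that the package landed in the interpreter you expect, `pip show` can be queried for its version. This is only a sketch: the `webui_version` helper and the `PYTHON` variable are hypothetical names, and inside the activated environment `python3` should point at the environment's own interpreter.

```shell
PYTHON="${PYTHON:-python3}"   # inside the activated venv this is the venv's interpreter

# Print the installed open-webui version, or nothing if it is not installed.
webui_version() {
  "$PYTHON" -m pip show open-webui 2>/dev/null | awk -F': ' '/^Version:/ {print $2}'
}

version="$(webui_version || true)"
if [ -n "$version" ]; then
  echo "open-webui $version is installed"
else
  echo "open-webui is not installed for $PYTHON"
fi
```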

Step 11: Running Open WebUI

After installation, you can start Open WebUI by executing the following command:

open-webui serve

If the server fails to start with an SQLite-related error, the likely cause is an outdated version of SQLite3 on your system; the chromadb package used within Open WebUI requires a newer one. Here’s how you can upgrade SQLite3 to resolve this:

Steps to Upgrade SQLite3

Install the Latest SQLite3 from Source:

First, download, compile, and install the latest SQLite version from the official source.

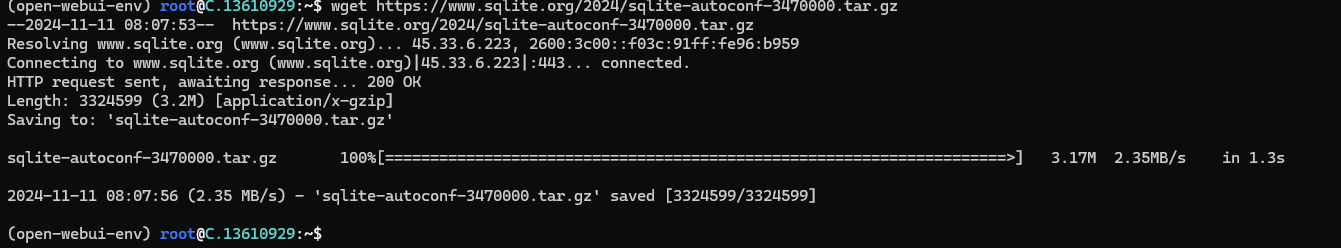

Run the following command to download version 3.47.0:

wget https://www.sqlite.org/2024/sqlite-autoconf-3470000.tar.gz

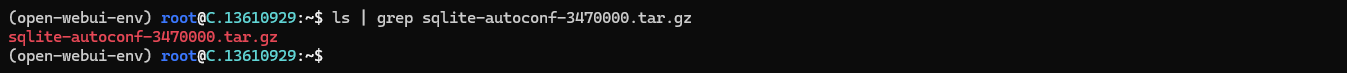

Verify the Download

After downloading, check if the file is in your current directory:

ls | grep sqlite-autoconf-3470000.tar.gz

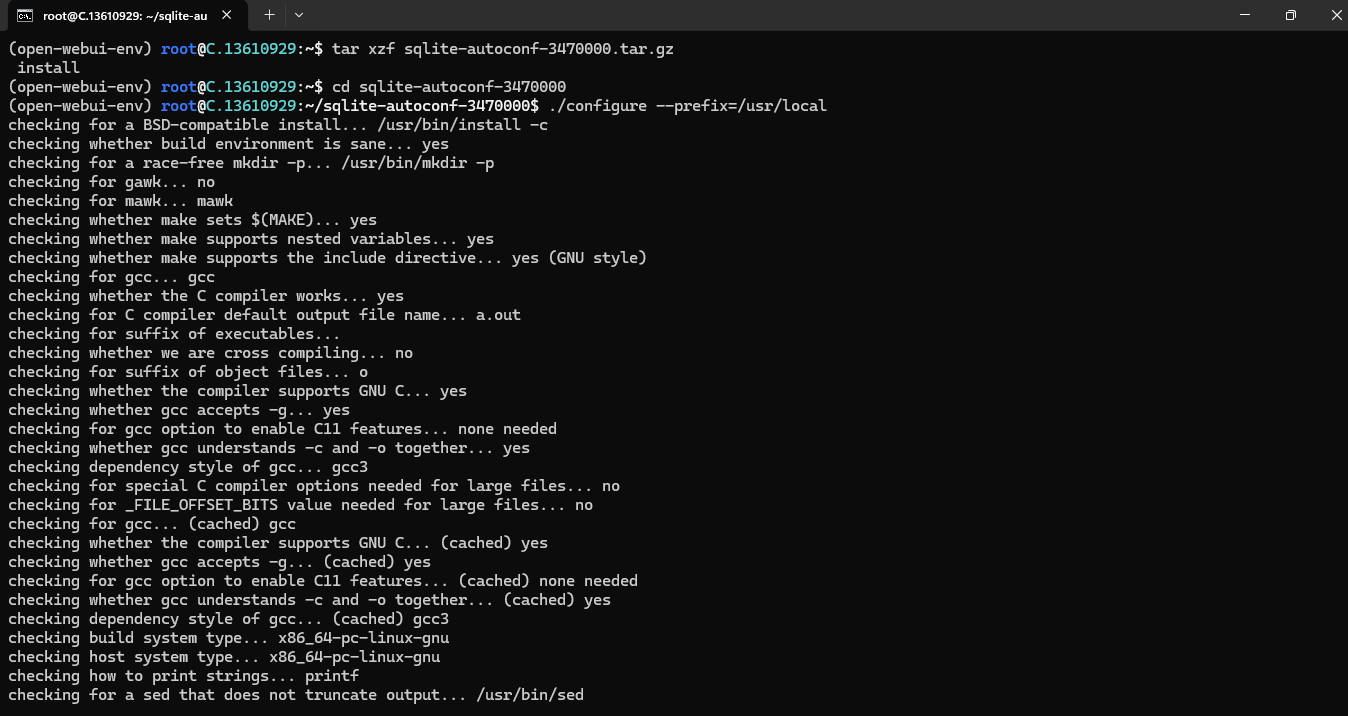

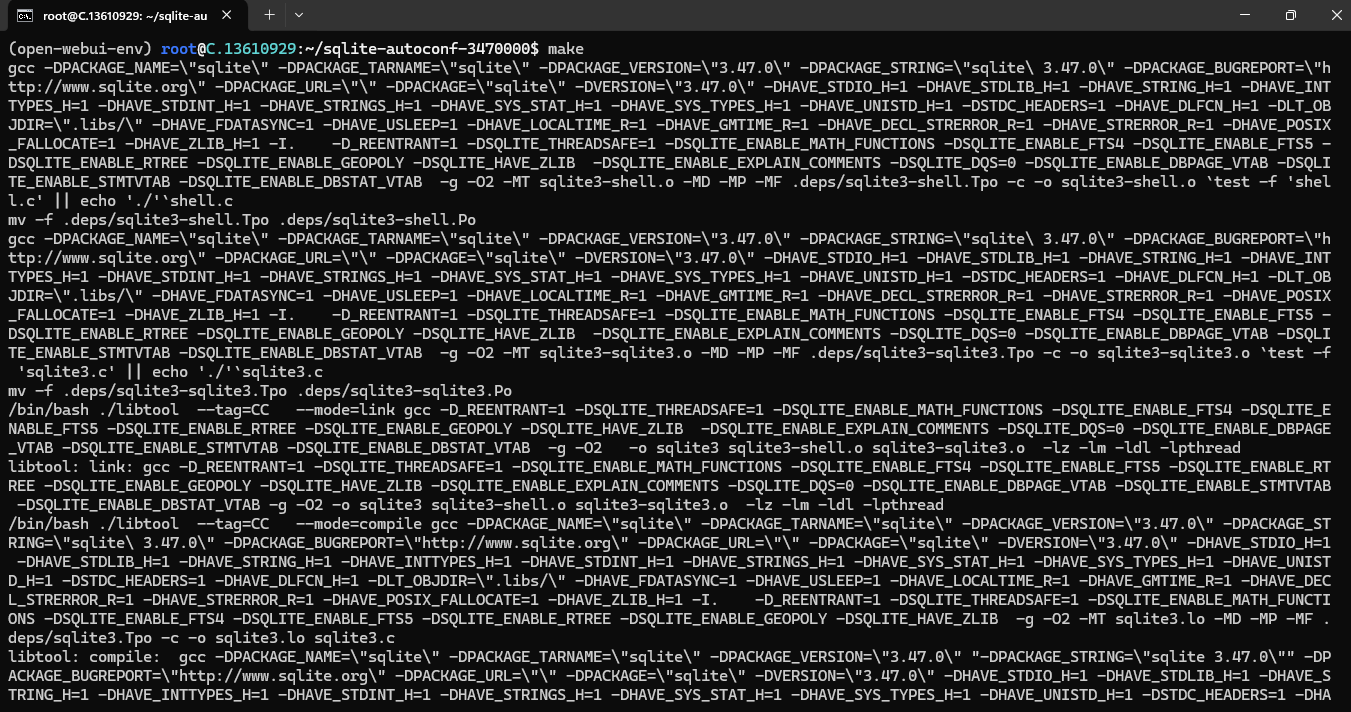

Extract, Compile, and Install SQLite

If the file is present, proceed with these commands:

tar xzf sqlite-autoconf-3470000.tar.gz

cd sqlite-autoconf-3470000

./configure --prefix=/usr/local

make

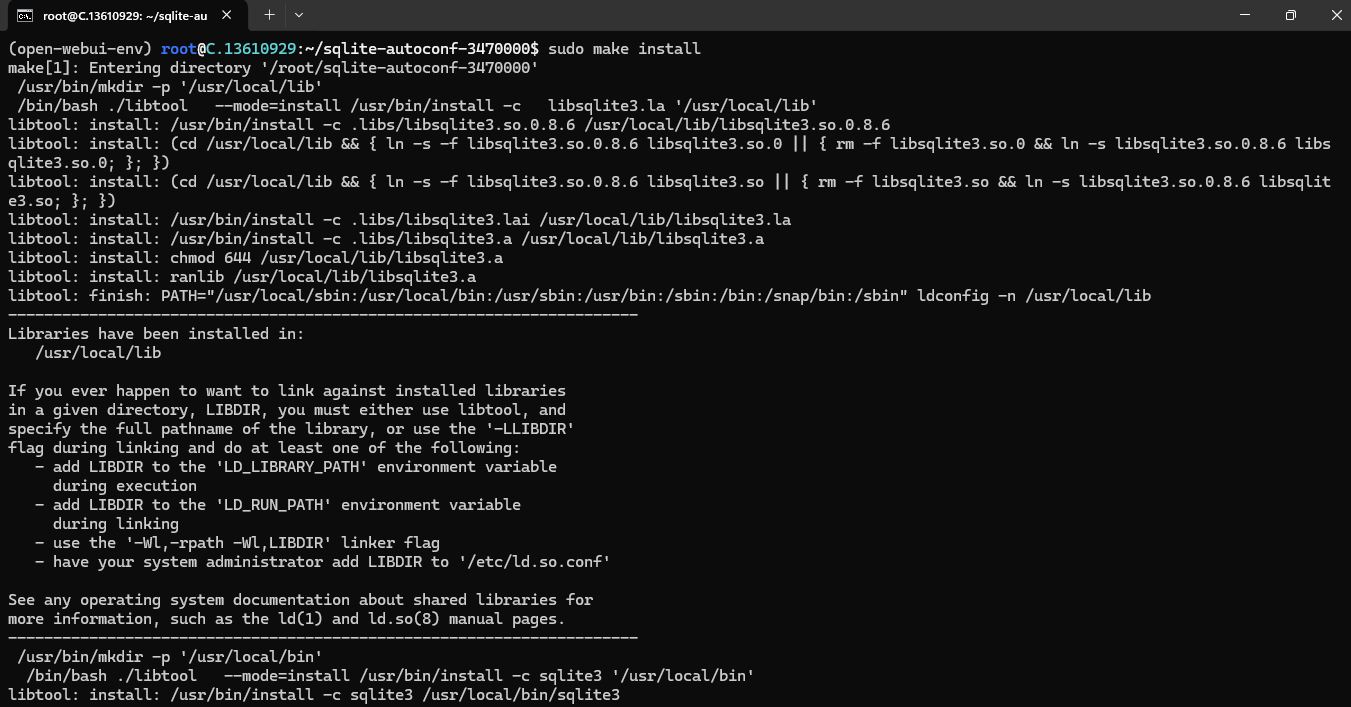

sudo make install

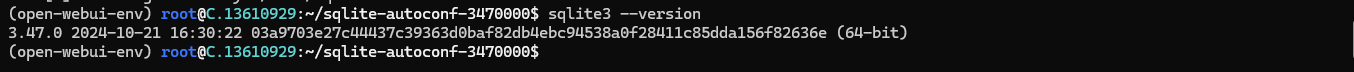

Verify Installation

After completing the installation, run the following command to confirm the version:

sqlite3 --version

Verify and Link SQLite Library Path

The newer version might not be in the default library path. Let's ensure it is correctly linked.

Update the Library Path

Run the following command to add the new SQLite location to your library path:

export LD_LIBRARY_PATH=/usr/local/lib:$LD_LIBRARY_PATH

Run the following command to create a symbolic link to the new SQLite library:

sudo ln -sf /usr/local/lib/libsqlite3.so.0 /usr/lib/libsqlite3.so.0
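The `sqlite3 --version` command reports the command-line tool, which is not necessarily the library that Python loads. The sketch below asks Python directly through the standard `sqlite3` module. The `version_at_least` helper is a made-up name, and the 3.35.0 threshold is an assumption about what chromadb asks for, so adjust it to whatever your error message states.

```shell
PYTHON="${PYTHON:-python3}"
MIN_SQLITE="${MIN_SQLITE:-3.35.0}"   # assumption: the minimum chromadb asks for

# True when version $1 is greater than or equal to version $2 (relies on sort -V).
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# sqlite3.sqlite_version is the version of the SQLite library loaded at runtime.
linked="$("$PYTHON" -c 'import sqlite3; print(sqlite3.sqlite_version)' 2>/dev/null || echo 0)"
echo "SQLite version seen by $PYTHON: $linked"

if version_at_least "$linked" "$MIN_SQLITE"; then
  echo "New enough for the assumed minimum ($MIN_SQLITE)"
else
  echo "Older than $MIN_SQLITE; the new library is not being picked up yet"
fi
```

Because the `export` above only lasts for the current shell session, the check is worth repeating after you reconnect to the VM.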

Now run the following command again to start the Open WebUI server:

open-webui serve

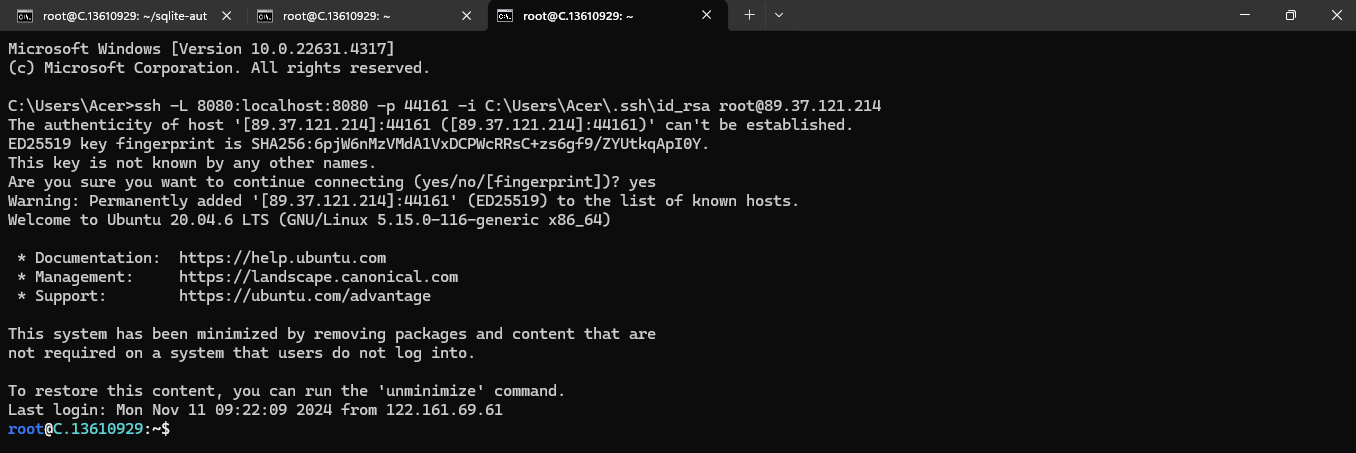
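Started this way, the server stops when the SSH session ends. One option is to run it in the background with `nohup` and poll the port until it answers. The sketch below assumes that `curl` is installed and that `open-webui serve` accepts a `--port` option; the log file name and the `wait_for_http` helper are hypothetical.

```shell
PORT="${PORT:-8080}"                      # port used throughout this tutorial
LOG_FILE="${LOG_FILE:-open-webui.log}"    # hypothetical log file name

# Poll a URL once per second until it answers or the attempts run out.
wait_for_http() {
  url="$1"
  tries="${2:-30}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    if curl -fsS --max-time 5 -o /dev/null "$url" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

if command -v open-webui >/dev/null 2>&1; then
  # Keep the server alive after logout and collect its output in the log file.
  nohup open-webui serve --port "$PORT" > "$LOG_FILE" 2>&1 &
  echo "Started Open WebUI (PID $!), logging to $LOG_FILE"
  if wait_for_http "http://localhost:$PORT" 120; then
    echo "Open WebUI is answering on port $PORT"
  else
    echo "No answer yet; inspect $LOG_FILE"
  fi
else
  echo "open-webui not found; activate the virtual environment first"
fi
```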

Step 12: Access VM with port forwarding and tunneling

To forward local port 8080 on your Windows machine to port 8080 on the VM, use the following command in Command Prompt or PowerShell:

ssh -L 8080:localhost:8080 -p 44161 -i C:\Users\Acer\.ssh\id_rsa root@89.37.121.214

Explanation of Parameters

- -L 8080:localhost:8080: Maps local port 8080 on your machine to port 8080 on the VM.

- -p 44161: Specifies the custom SSH port for your VM.

- -i C:\Users\Acer\.ssh\id_rsa: Path to your private SSH key.

- root@89.37.121.214: The username (root) and IP address of your VM.
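On macOS or Linux, the same options can be stored in an OpenSSH configuration file so the long command does not have to be retyped. The sketch below writes such a file using the example address, port, and user from the command above. The host alias `open-webui-vm`, the file name, and the key path `~/.ssh/id_rsa` are assumptions, so substitute your own values.

```shell
SSH_CONFIG="${SSH_CONFIG:-./nodeshift-ssh-config}"   # hypothetical file name

# Write a host entry that carries the port, key, and port-forwarding settings.
cat > "$SSH_CONFIG" <<'EOF'
Host open-webui-vm
    HostName 89.37.121.214
    Port 44161
    User root
    IdentityFile ~/.ssh/id_rsa
    LocalForward 8080 localhost:8080
EOF
chmod 600 "$SSH_CONFIG"

echo "Connect with: ssh -F $SSH_CONFIG open-webui-vm"
```

The `LocalForward` line plays the role of the `-L` option, so the tunnel opens whenever you connect through this entry.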

How to Access

After running this command, you should be able to access Open WebUI by visiting http://localhost:8080 or http://127.0.0.1:8080/

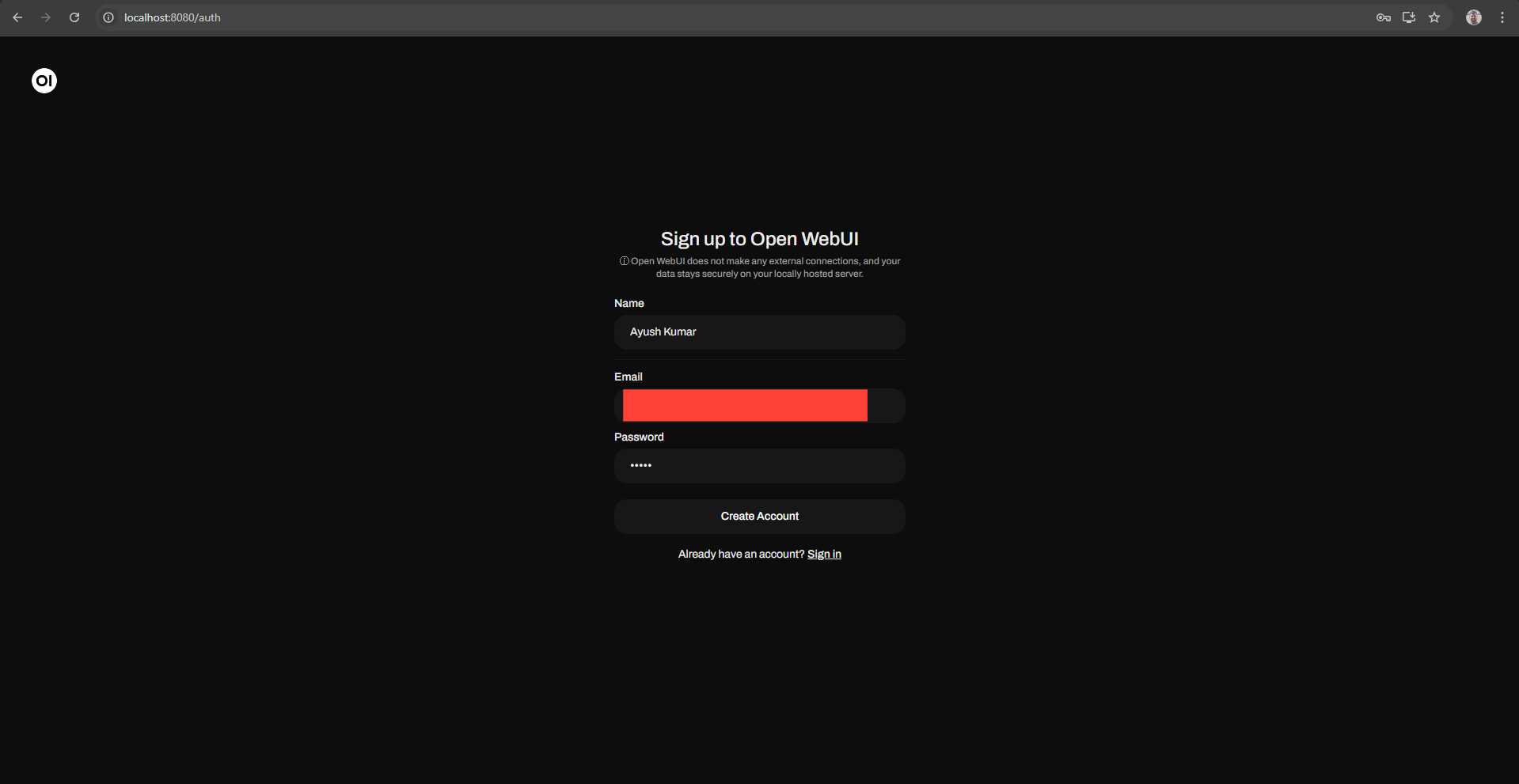

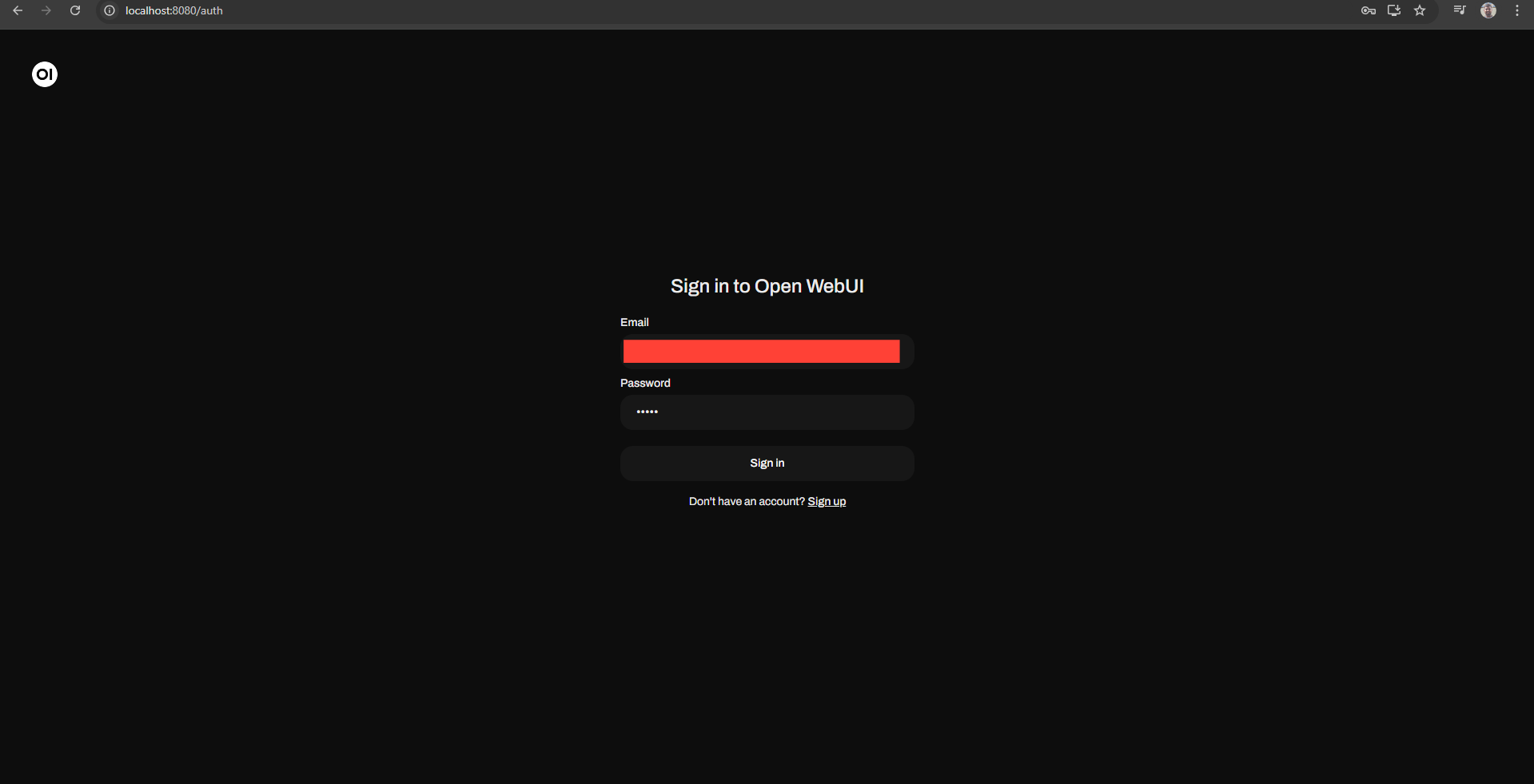

Step 13: Logging In and Creating an Account

- After installation, access Open WebUI by navigating to http://localhost:8080 or http://127.0.0.1:8080/ in your web browser.

Creating an Account:

- Click on the Sign Up option on the login page.

- Fill in your details:

  - Username: Choose a unique username.

  - Email: You can use any email address since all data is stored locally.

  - Password: Create a secure password.

- After submitting, your account will be marked as pending until approved by an administrator.

Admin Approval:

- The first account created during the installation has administrator privileges. Log in with this account to approve new user registrations.

- Navigate to the users section in the admin panel to change the status of pending accounts to active.

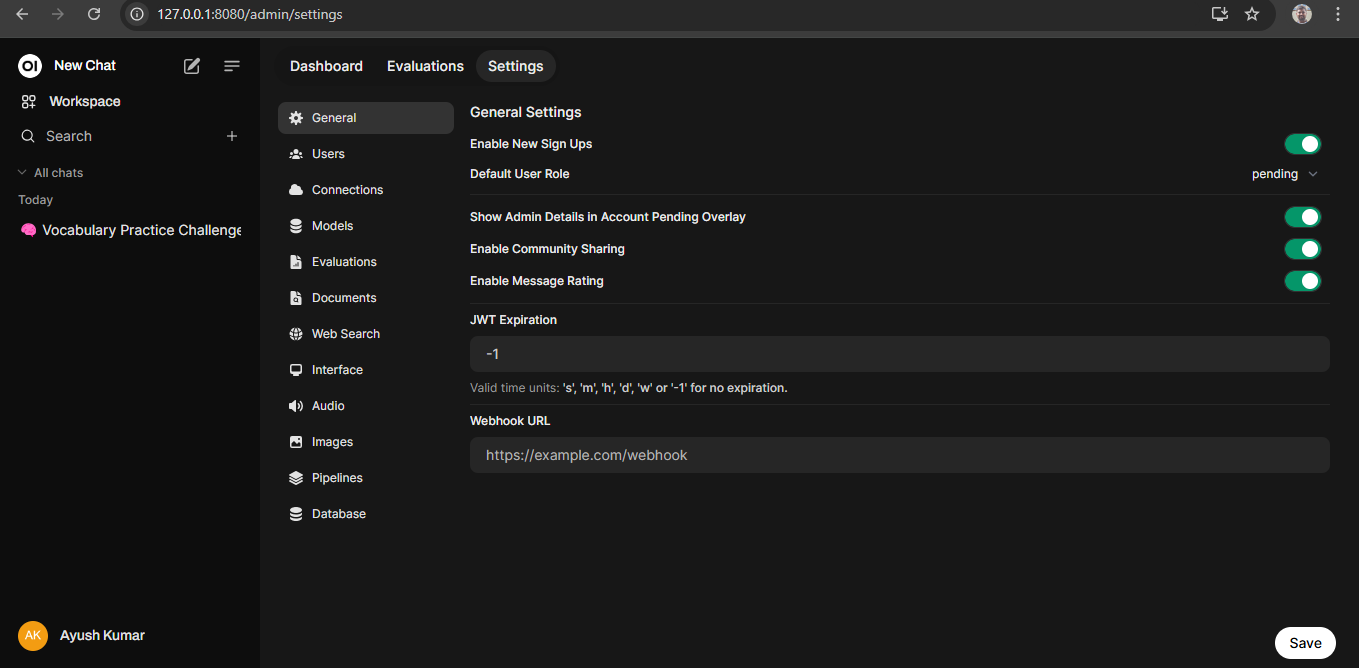

Step 14: Additional Settings

Once logged in as an administrator, you can configure various settings:

User Management:

- In the admin panel, you can manage user roles (admin or regular user) and approve or deny pending accounts.

Privacy Settings:

- All data, including login details and user information, is stored locally to ensure privacy and security.

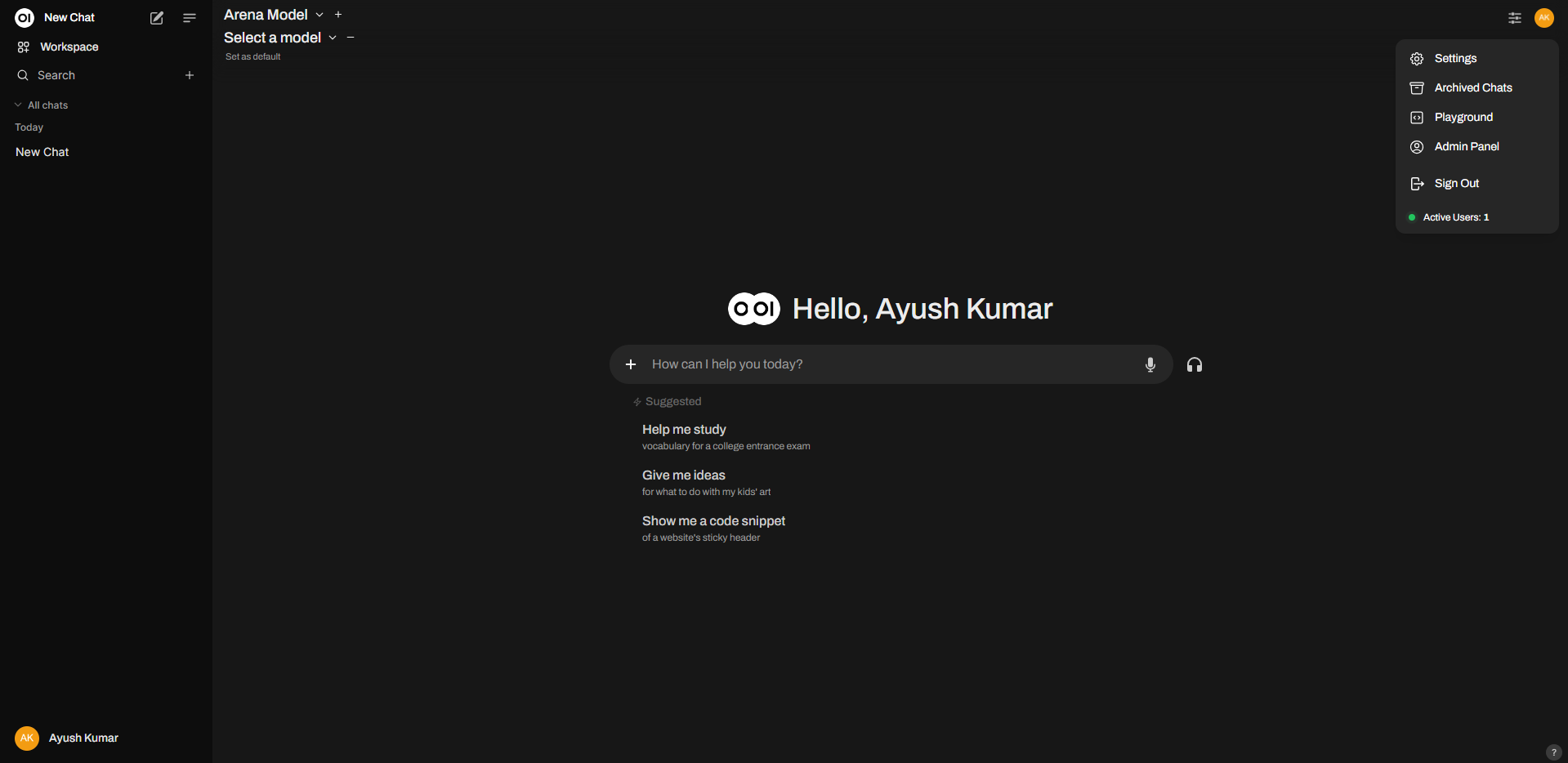

Step 15: Accessing the Admin Panel

To access the admin panel:

- Click on your username in the top-right corner and select Admin Panel from the dropdown menu.

- Here, you will find options to manage users, connections, models, and other system settings.

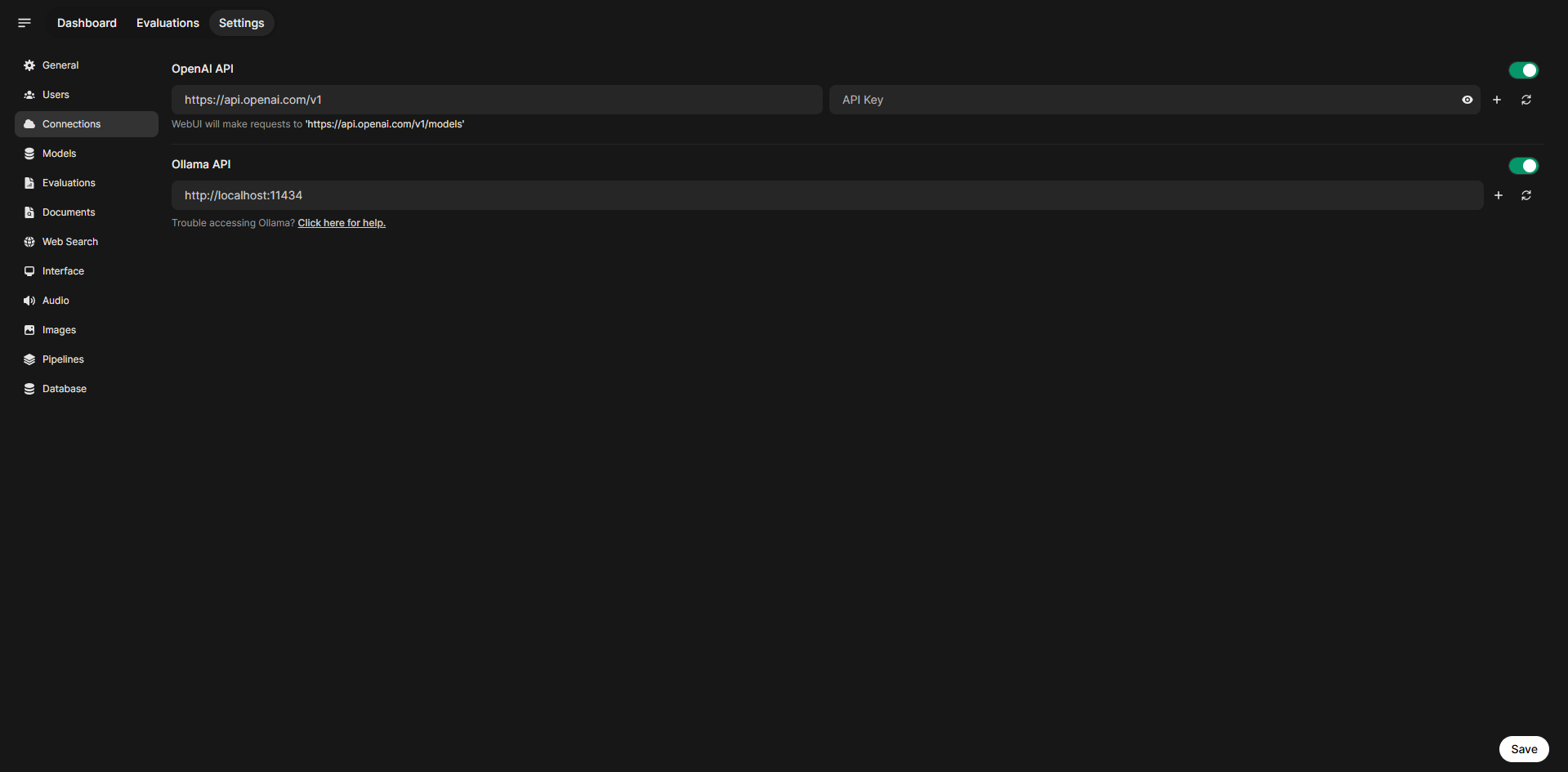

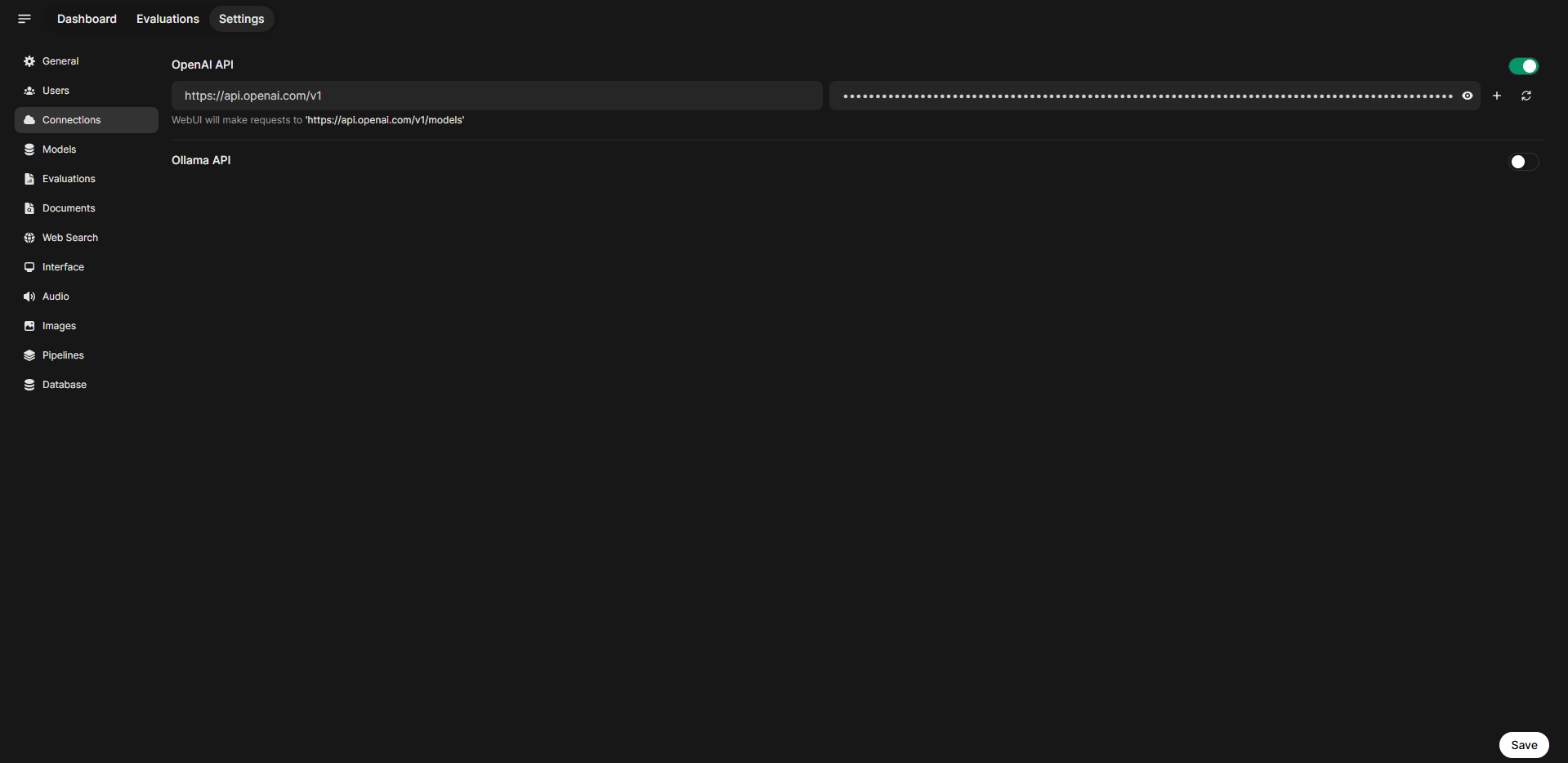

Step 16: Connection Options Available

Open WebUI supports multiple connection options:

- OpenAI: Connects directly to OpenAI's API for model interactions.

- Ollama: If you have Ollama installed locally or on a different server, you can configure it by setting OLLAMA_BASE_URL in your Docker command or environment variables.
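For the pip-based setup used in this tutorial, the variable can be exported in the same shell before starting `open-webui serve`. The sketch below assumes Ollama runs on the same VM on its default port 11434 and that `curl` is available; the reachability probe is optional.

```shell
# 11434 is Ollama's default port; change the host and port if Ollama runs elsewhere.
export OLLAMA_BASE_URL="${OLLAMA_BASE_URL:-http://localhost:11434}"

# Ask Ollama for its local model list; /api/tags is part of Ollama's REST API.
if curl -fsS --max-time 5 "$OLLAMA_BASE_URL/api/tags" >/dev/null 2>&1; then
  echo "Ollama is reachable at $OLLAMA_BASE_URL"
else
  echo "Could not reach Ollama at $OLLAMA_BASE_URL"
fi
```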

Step 17: Adding OpenAI API Keys

To integrate OpenAI services:

- In the admin panel, navigate to the connections tab.

- Locate the section for adding API keys.

- Enter your OpenAI API key in the designated field.

- Save your changes to establish a connection with OpenAI services.

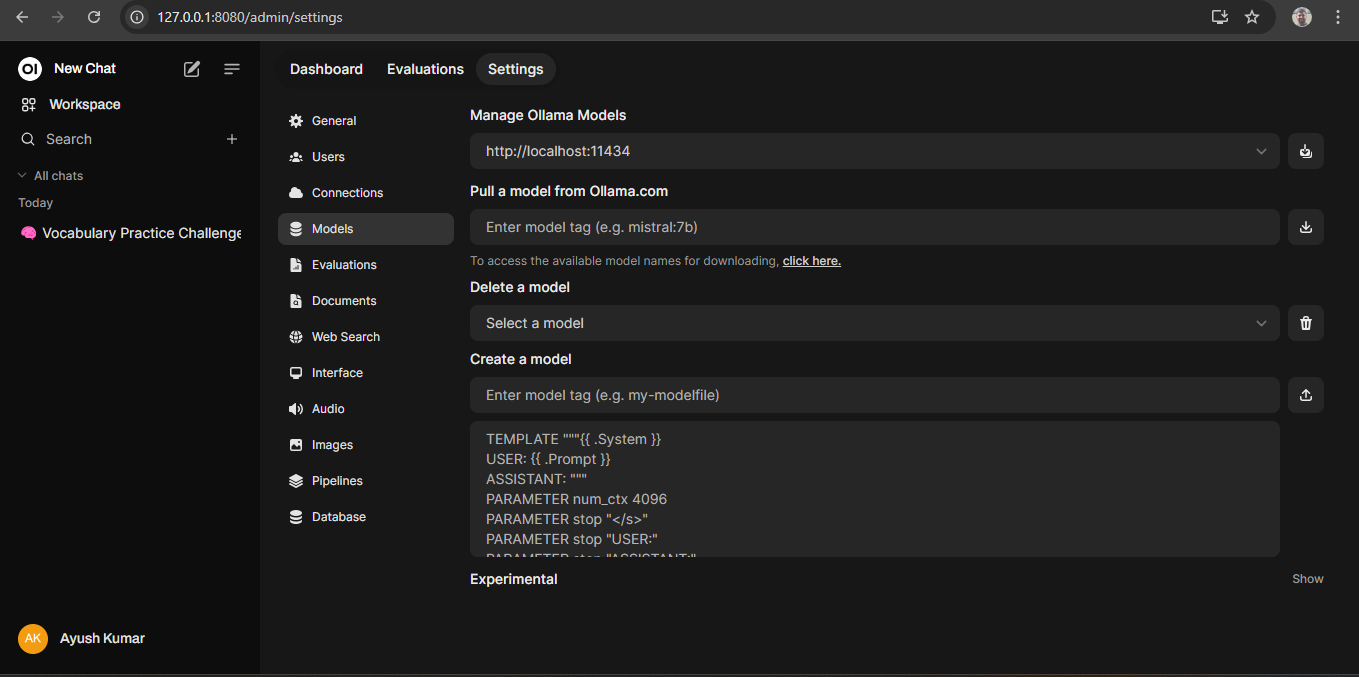
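If the connection does not come up, it can be useful to test the key outside Open WebUI first. The sketch below sends one request to OpenAI's model-listing endpoint and prints the HTTP status. Reading the key from an `OPENAI_API_KEY` environment variable is a convention chosen for this example, and `have_key` is a hypothetical helper.

```shell
# True when a key has been exported in this shell.
have_key() {
  [ -n "${OPENAI_API_KEY:-}" ]
}

if have_key; then
  # 200 means the key was accepted; 401 means it was rejected.
  status="$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 \
    -H "Authorization: Bearer $OPENAI_API_KEY" \
    https://api.openai.com/v1/models)" || status="unreachable"
  echo "OpenAI API responded with HTTP status: $status"
else
  echo "OPENAI_API_KEY is not set; export it before running this check"
fi
```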

Step 18: Model Options

Under the models tab in the admin panel:

- You can select which models are available for users to interact with.

- Options include various models from OpenAI as well as those provided by Ollama.

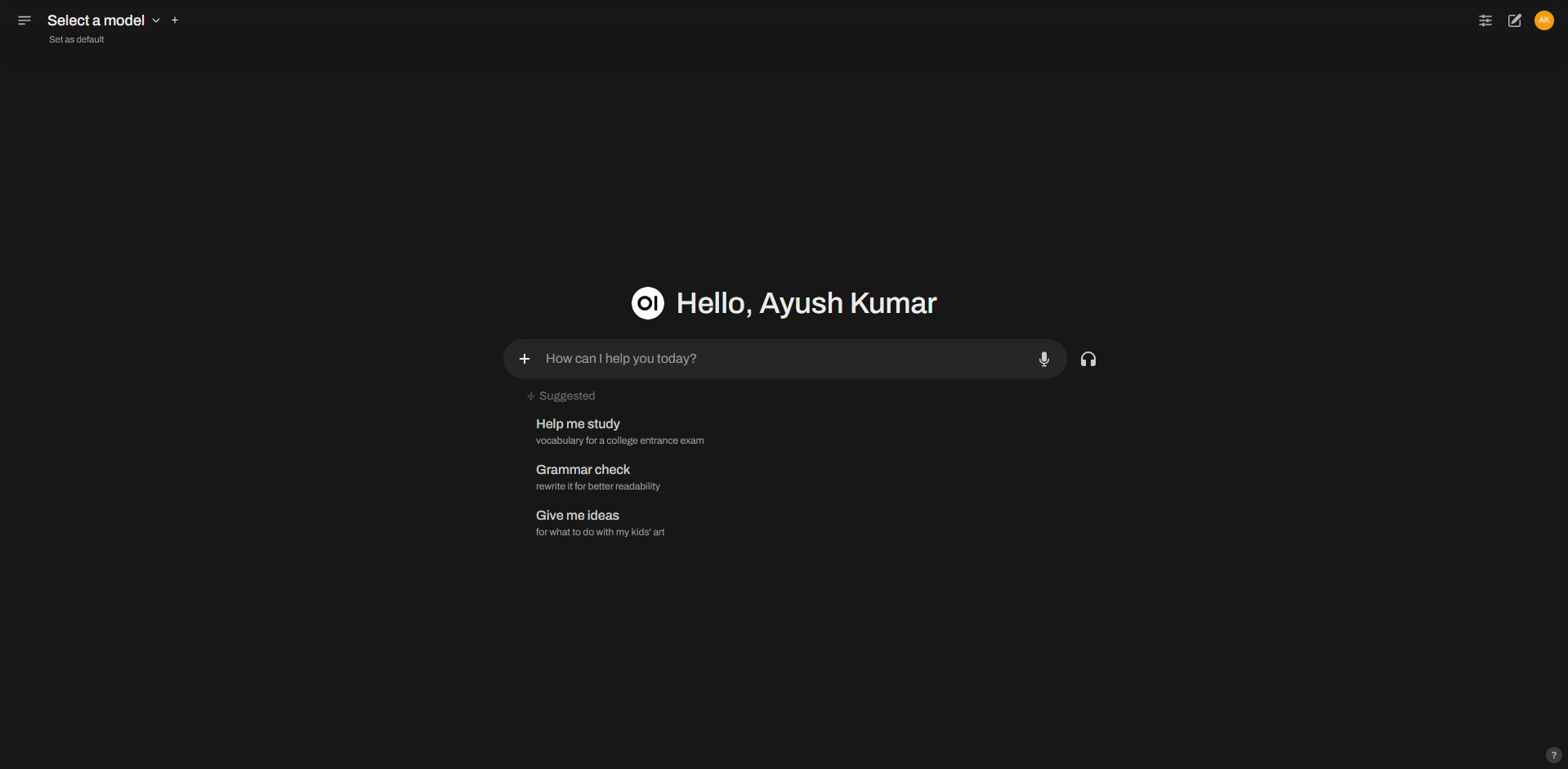

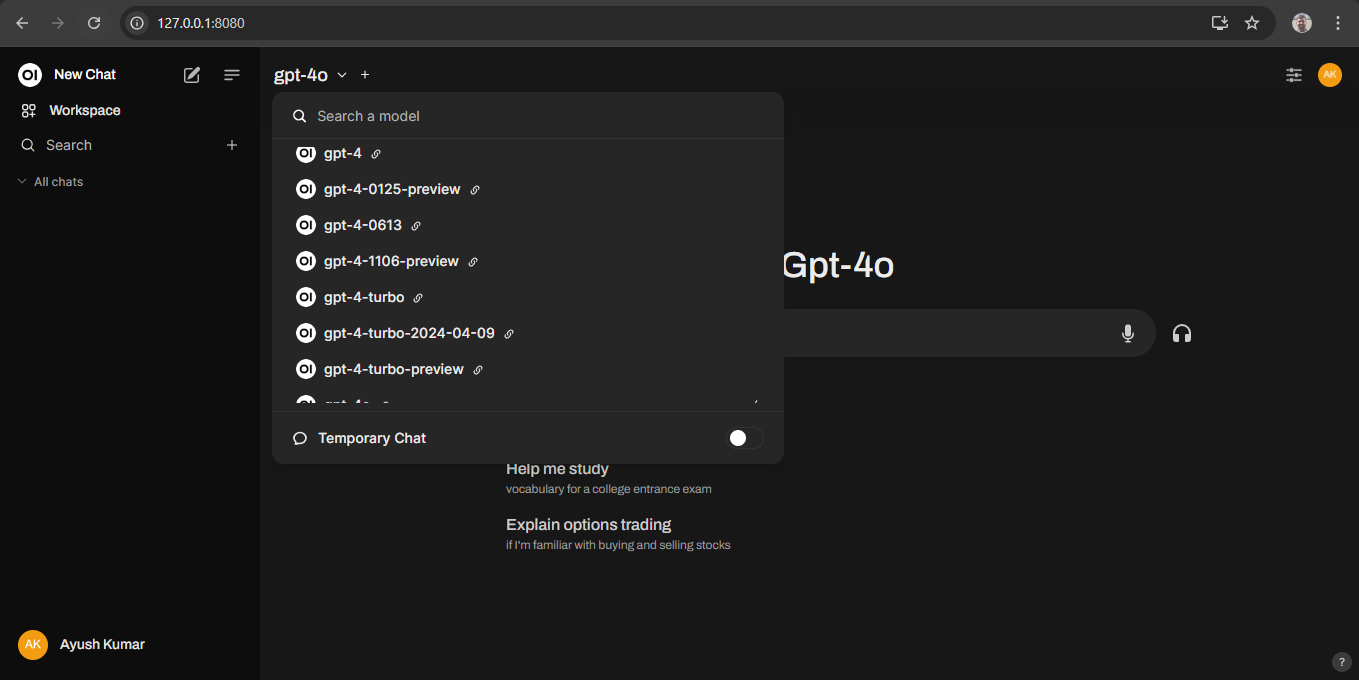

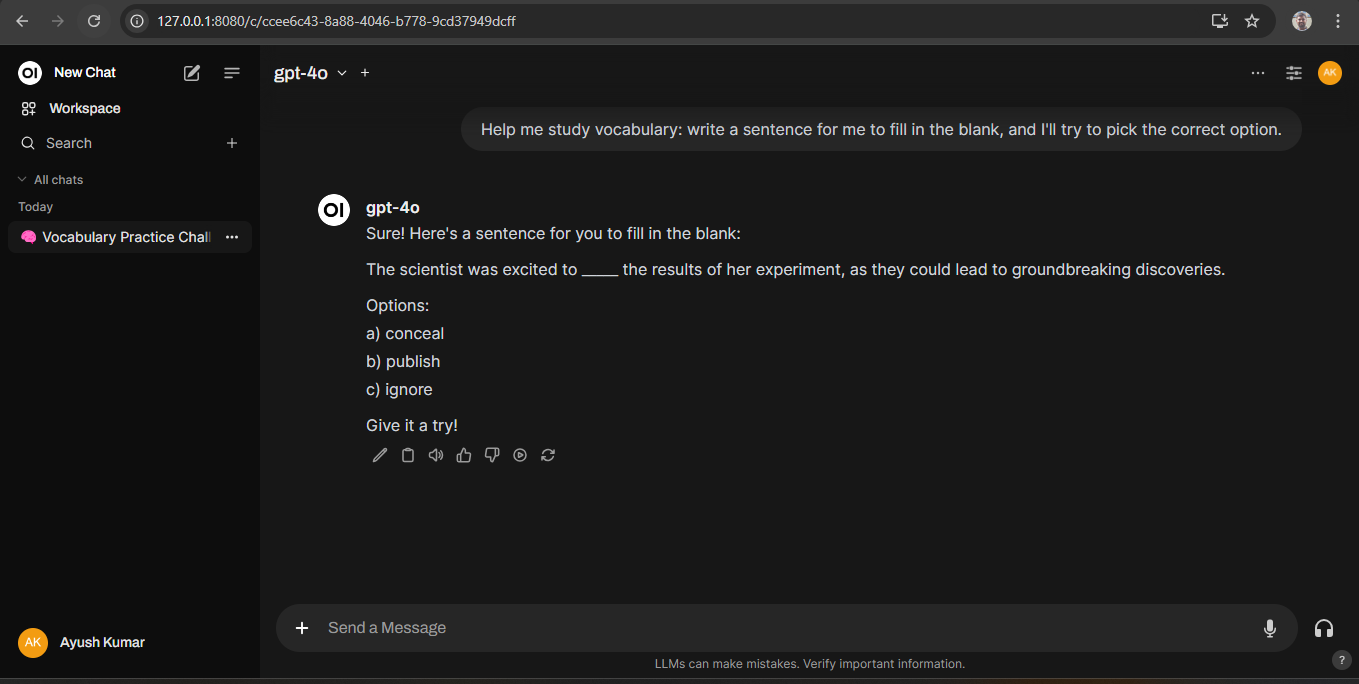

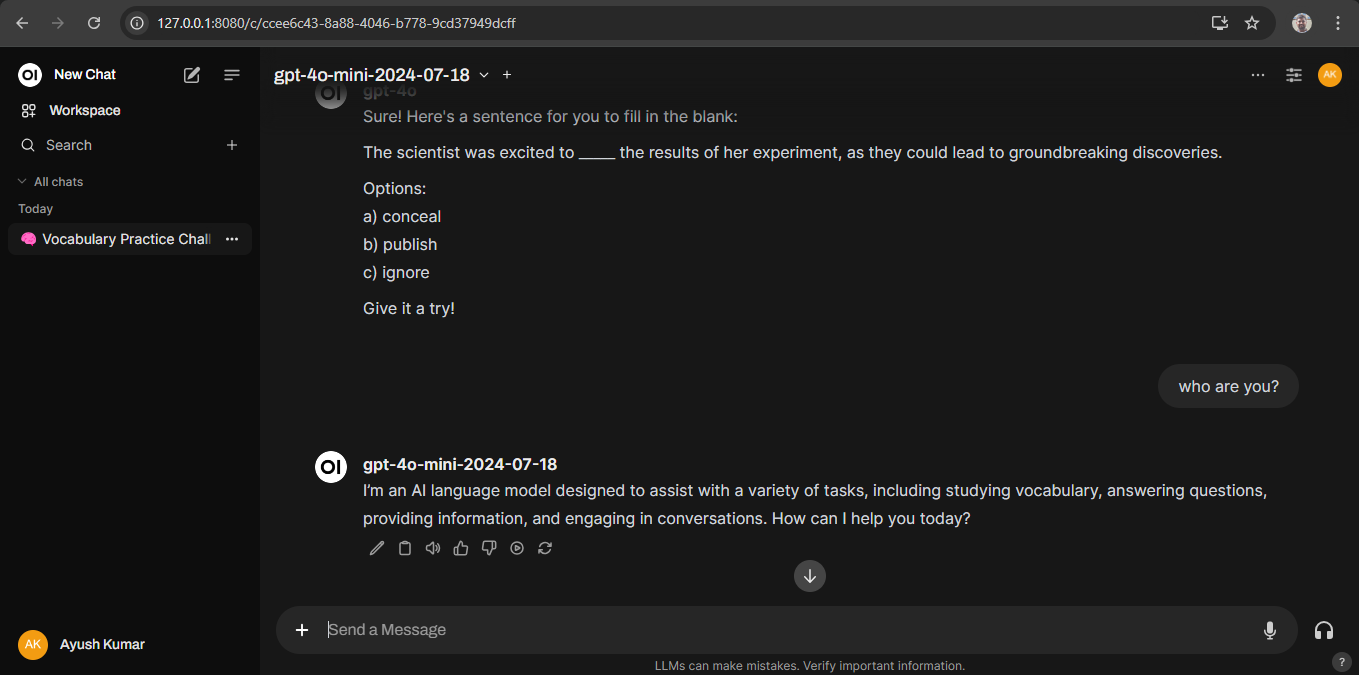

Step 19: Accessing the Chat Interface

After successfully adding your OpenAI API key or Ollama connection and loading the models, you can begin interacting with them through Open WebUI.

From the main dashboard of Open WebUI, locate and click on the chat or models tab. This will take you to the interface where you can interact with the loaded models.

Conclusion

In this guide, we introduced Open WebUI, a powerful and adaptable platform for managing and interacting with language models, whether on a high-performance GPU or a budget-friendly CPU virtual machine, and provided a step-by-step tutorial on setting it up on a NodeShift virtual machine. You learned how to install the required software, upgrade essential tools like SQLite, access the admin panel, add an OpenAI API key, and run the chat interface in your browser.

For more information about NodeShift: